Introduction

Welcome to part 3 of the Neural Network Primitives series, where we continue to explore primitive forms of artificial neural networks. In this 3rd part we will discuss the Sigmoid Neuron, the next upgrade from the Perceptron that we saw in part 2.

In part 2, we saw how the Perceptron was the first true primitive form of neural network with machine learning power, giving it more capabilities than its predecessor, the McCulloch-Pitts neuron, which we saw in part 1.

- Also Read- Neural Network Primitives Part 1 – McCulloch Pitts Neuron Model (1943)

- Also Read- Neural Network Primitives Part 2 – Perceptron Model (1957)

- Also Read- Neural Network Primitives Final Part 4 – Modern Artificial Neuron

In this post we will understand the drawbacks of the Perceptron and see how the Sigmoid Neuron takes everything to the next level.

Limitations of Perceptron

Strict Activation Function

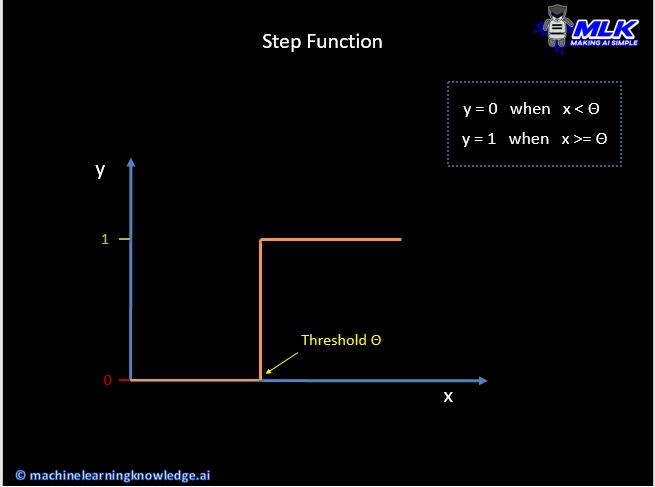

The Perceptron uses a step function as its activation function. If we take a quick look at this step function, we will see that the decision changes suddenly from 0 to 1 at the threshold.

To interpret this with a real-life example, let us assume that there is a trained perceptron which predicts whether a person is likely to purchase a house based on their yearly income.

If the threshold is $50.4K, then even a person with a yearly salary of $50.3K, or even $50.39K, will be told by the perceptron that they can’t buy a house.
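To make that sudden jump concrete, here is a minimal Python sketch of a single-input perceptron for the house example. The weight `w = 1.0` and bias `b = -50.4` (income measured in thousands of dollars) are hypothetical values chosen so that the threshold lands at $50.4K, and we assume the common convention that the step function outputs 1 when its input is exactly 0.

```python
def step(z: float) -> int:
    """Step activation: 1 if z >= 0, else 0 (assumed convention at z == 0)."""
    return 1 if z >= 0 else 0


def perceptron(income_k: float, w: float = 1.0, b: float = -50.4) -> int:
    """Hypothetical trained perceptron: income in $K -> 1 (can buy) or 0 (can't)."""
    return step(w * income_k + b)


for income in [50.3, 50.39, 50.4, 50.5]:
    print(f"income ${income}K -> decision {perceptron(income)}")
```

A difference of just $10 in income ($50.39K versus $50.4K) flips the answer from 0 to 1, and the output never takes any value other than 0 or 1.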

Binary Output

Another effect of the step function is that the output of the perceptron is always binary, i.e. 0 or 1. Usually 0 and 1 are interpreted as False and True, or No and Yes. But not all real-world problems look for binary answers like Yes or No.

A new activation function – Sigmoid Function

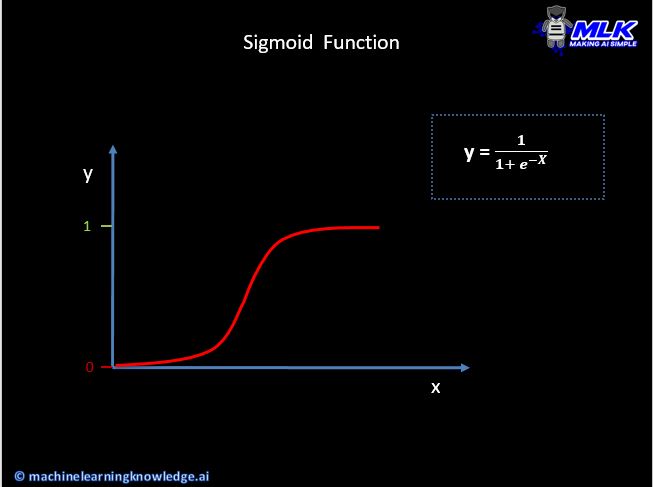

As you noticed above, the root cause of the Perceptron's limitations is its activation function. We need a better choice of activation function to address these shortcomings. Meet the Sigmoid function!

- If you look at the shape of the Sigmoid function above, you will observe that it does not jump suddenly from 0 to 1. Instead, there is a smooth transition between 0 and 1.

- The value of y is not restricted to binary 0 or 1. Instead, it can take any real value between 0 and 1.
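For reference, the sigmoid (logistic) function is σ(z) = 1 / (1 + e^(−z)). The minimal Python sketch below evaluates it next to the Perceptron's step function on a few inputs around 0, so you can see the smooth transition in numbers: σ(0) is exactly 0.5, and mathematically the output approaches, but never reaches, 0 or 1 as z moves away from 0.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, mapping any real z smoothly into (0, 1).

    Split on the sign of z so math.exp only sees non-positive arguments,
    which avoids OverflowError for very large |z|.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def step(z: float) -> int:
    """Step function used by the Perceptron, for comparison."""
    return 1 if z >= 0 else 0


# Compare the two activations on inputs around the threshold z = 0
for z in [-4.0, -1.0, -0.1, 0.0, 0.1, 1.0, 4.0]:
    print(f"z = {z:5.1f}   step = {step(z)}   sigmoid = {sigmoid(z):.3f}")
```

The sign split inside `sigmoid` is an implementation choice of this sketch for numerical safety; both branches compute the same mathematical function.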

Sigmoid Neuron

A sigmoid neuron is an artificial neuron that has the sigmoid activation function at its core. It is similar to the Perceptron, but it uses the sigmoid function instead of a step function.

This change of activation function is what makes it an upgrade over the Perceptron and addresses the shortcomings we discussed above. Before we see how, let us understand the anatomy of a Sigmoid Neuron.

Below are the various parts of a Sigmoid Neuron:

- Inputs

- Bias

- Weights

- Neuron

- Output

Inputs

The inputs to a sigmoid neuron can be any real numbers.

Bias

The bias of a neuron is an additional learnable parameter that offsets the activation function. You can imagine the bias as shifting the graph of the activation function left or right to better fit the data. The bias does not depend on the input.
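As a minimal sketch of this shift, consider a single-input sigmoid neuron with output σ(w·x + b), using a hypothetical fixed weight `w = 2.0` and three hypothetical bias values. The output is exactly 0.5 where w·x + b = 0, i.e. at x = −b/w, so changing b slides the curve left or right without changing its shape.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron(x: float, w: float, b: float) -> float:
    """Single-input sigmoid neuron with weight w and bias b."""
    return sigmoid(w * x + b)


w = 2.0  # hypothetical weight, kept fixed
for b in [-6.0, -2.0, 4.0]:  # hypothetical bias values
    midpoint = -b / w  # input at which the output is exactly 0.5
    print(f"b = {b:+.1f}: output is 0.5 at x = {midpoint:+.1f}, "
          f"neuron(midpoint) = {neuron(midpoint, w, b):.3f}")
```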

Weights

In a sigmoid neuron, each input has a weight associated with it. These weights are initially unknown and are learnt during the training phase.

For ease of mathematical representation, the bias is treated as a weight whose input value is always 1.
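This trick can be checked with a short sketch: folding the bias in as an extra weight w0 on a constant input x0 = 1 gives exactly the same weighted sum as adding the bias separately. The function names, weights, bias and inputs below are hypothetical, chosen only for illustration.

```python
def weighted_sum(weights: list[float], inputs: list[float], bias: float) -> float:
    """z = w1*x1 + ... + wn*xn + b, with the bias kept separate."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def weighted_sum_augmented(weights: list[float], inputs: list[float], bias: float) -> float:
    """Same z, but the bias is folded in as weight w0 on a constant input x0 = 1."""
    aug_weights = [bias] + weights  # [w0 = b, w1, ..., wn]
    aug_inputs = [1.0] + inputs     # [x0 = 1, x1, ..., xn]
    return sum(w * x for w, x in zip(aug_weights, aug_inputs))


# Hypothetical weights, bias and inputs
w, b, x = [0.5, -1.0], 0.25, [2.0, 3.0]
print(weighted_sum(w, x, b), weighted_sum_augmented(w, x, b))
```

This prints `-1.75 -1.75`. The augmented form lets the bias be learnt exactly like any other weight.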

Neuron

The neuron is a computational unit that receives incoming input signals, computes on them, and fires an output. It consists of the following two elements:

Summation Function

This calculates the sum of the incoming inputs, each multiplied by its respective weight.

Activation Function

Here the activation function is the sigmoid function that we discussed above.

Output

This is the output of the neuron, which can be any value between 0 and 1.

How Sigmoid Neuron works

Now that we understand the anatomy, let us see how an already trained sigmoid neuron works.

- The input data is fed into the Sigmoid neuron.

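- Compute the dot product of the inputs and their respective weights, i.e. multiply each input by its weight and sum the results (with the bias treated as a weight on a constant input of 1).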

- Apply the sigmoid function to the above sum to produce an output between 0 and 1.
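The three steps above can be sketched in Python as follows. The function names are hypothetical, and the weights and bias stand in for values that would have been learnt during training; they are made-up numbers for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Forward pass of an already trained sigmoid neuron."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    # Step 2: dot product of inputs and weights, plus the bias
    # (equivalent to treating the bias as a weight on a constant input of 1)
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step 3: squash the sum into (0, 1) with the sigmoid
    return sigmoid(z)


# Step 1: feed the input data in (hypothetical trained weights and bias)
weights, bias = [0.5, -1.0], 0.25
print(sigmoid_neuron([2.0, 3.0], weights, bias))
```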

Why is the Sigmoid Neuron an upgrade of the Perceptron?

To understand this better, let us revisit the problem of predicting whether a person will purchase a house based on their yearly income. If this prediction is made by a sigmoid neuron, it will not answer with 0 or 1 (i.e. no or yes). Instead, it will output a real number between 0 and 1.

And there is another kind of value that always falls between 0 and 1: probability!

So the output of a sigmoid neuron can be interpreted as the probability of something, rather than a strict yes or no decision. This also solves the problem of a sudden change in decision at a specific threshold value.
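Returning to the house example, here is a minimal sketch that puts a hypothetical sigmoid neuron next to the step-based perceptron. We assume the same weight `W = 1.0` and bias `B = -50.4` (income in thousands of dollars) for both; these are purely illustrative numbers, not values from a real trained model.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


W, B = 1.0, -50.4  # hypothetical weight and bias; income measured in $K


def perceptron(income_k: float) -> int:
    """Step-function neuron: a hard yes (1) or no (0)."""
    return 1 if W * income_k + B >= 0 else 0


def sigmoid_neuron(income_k: float) -> float:
    """Sigmoid neuron: a value in (0, 1), read as a purchase probability."""
    return sigmoid(W * income_k + B)


for income in [45.0, 50.3, 50.39, 50.4, 55.0]:
    print(f"${income}K: perceptron = {perceptron(income)}, "
          f"sigmoid neuron = {sigmoid_neuron(income):.3f}")
```

Near the threshold the perceptron flips abruptly from 0 to 1, while the sigmoid neuron's output changes gradually: $50.39K gets a value just under 0.5 and $50.4K gets exactly 0.5, so nearby incomes receive nearby answers.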

The difference between Perceptron and Sigmoid Neuron can be visualized below.

Some Points to Consider…

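- The sigmoid function makes the neuron's output a smooth, non-linear function of its inputs, in contrast with the hard 0/1 jump of the perceptron. However, if we turn that output into a yes/no decision by thresholding it at 0.5, the decision boundary of a single sigmoid neuron is still the line (hyperplane) where the weighted sum plus bias equals 0, just like the perceptron's. So a single sigmoid neuron is still not enough to handle data that is not linearly separable.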

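- A sigmoid neuron can be trained using gradient descent, a very popular learning technique in machine learning. This works because the sigmoid function is differentiable everywhere, whereas the step function's derivative is zero everywhere except at the threshold (where it is undefined), which gives gradient descent nothing to work with. Gradient descent needs a separate article of its own to explain properly.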

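- Although the sigmoid neuron addresses the drawbacks of the Perceptron, it is no longer a favorable choice in today’s deep learning era. This is because it suffers from the vanishing gradient problem: the sigmoid's derivative is at most 0.25 and approaches 0 for large positive or negative inputs, so gradients shrink as they are propagated back through many layers.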
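Both the gradient descent and the vanishing gradient points can be illustrated with one small sketch. It trains a single-input sigmoid neuron with plain batch gradient descent on a tiny hypothetical dataset (standardized incomes, label 1 = buys a house), assuming a mean squared error loss; other losses, such as cross-entropy, are also common. The chain rule brings in the sigmoid's derivative σ'(z) = σ(z)(1 − σ(z)), which exists everywhere (unlike the step function's) but never exceeds 0.25.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_derivative(z: float) -> float:
    """d(sigmoid)/dz = sigmoid(z) * (1 - sigmoid(z)); its maximum is 0.25 at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def train(xs: list[float], ys: list[float], lr: float = 1.0,
          epochs: int = 2000) -> tuple[float, float]:
    """Batch gradient descent on the loss (1/2n) * sum((sigmoid(w*x + b) - y)^2)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            z = w * x + b
            # Chain rule: dLoss/dz = (prediction - target) * sigmoid'(z)
            dz = (sigmoid(z) - y) * sigmoid_derivative(z)
            grad_w += dz * x
            grad_b += dz
        # Move the parameters against the average gradient
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical standardized incomes and labels (1 = buys a house)
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0.0, 0.0, 1.0, 1.0]
w, b = train(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
print([round(sigmoid(w * x + b), 3) for x in xs])
print("sigmoid'(0) =", sigmoid_derivative(0.0))  # the largest possible value, 0.25
```

Because every gradient passes through a factor of at most 0.25, and that factor shrinks toward 0 as |z| grows, stacking many sigmoid layers multiplies many such small factors together. This is the vanishing gradient problem mentioned above.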

In the End…

I hope this was an entertaining read that helped you understand how the Sigmoid Neuron takes us to the next level from the Perceptron.

With this we end the 3rd part of our series Neural Network Primitives, where we have been exploring early primitive forms of modern deep learning.
