Introduction

In this article, we will take a look at the GoogleNet architecture, a deep learning image classification model that was state of the art when it was introduced. We will then implement a minimal version of GoogleNet in Keras and train it on the CIFAR-10 dataset.

What is GoogleNet?

Developed by a Google research team, GoogleNet (spelled GoogLeNet in the original paper) is a 22-layer deep convolutional network for image classification. It won the ILSVRC 2014 classification challenge with a top-5 error rate of 6.67%, i.e. a top-5 accuracy of about 93.3% on the ImageNet dataset, which was remarkably high at the time.


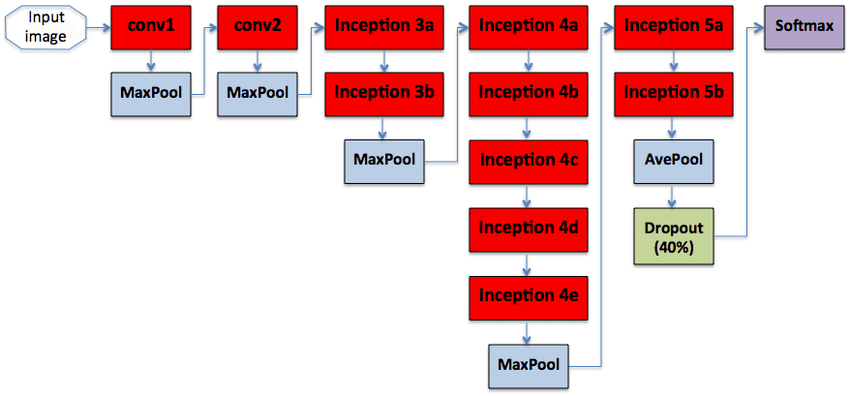

Architecture of GoogleNet

The model architecture is compact compared to other models such as AlexNet, VGG, and ResNet. Its main difference is that it does not stack several large dense (fully connected) layers at the end of the network. Instead, it averages the final feature maps with a pooling layer and relies on small convolution filters, including 1×1 convolutions that reduce the number of channels. This keeps the network deep while greatly decreasing the computation required.
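To put the saving from dropping the dense layers into numbers, the sketch below compares two classifier heads. It assumes a final 7×7×1024 feature map and 1,000 output classes (ImageNet-sized shapes chosen for illustration), and dense_params is a helper made up for this example.

```python
def dense_params(in_features: int, units: int) -> int:
    """Weights plus biases of a fully connected (Dense) layer."""
    return in_features * units + units

# Assumed shapes: a 7x7x1024 final feature map and 1,000 ImageNet classes
H, W, C, CLASSES = 7, 7, 1024, 1000

# Head 1: flatten the whole feature map and feed it to a dense classifier
flatten_head = dense_params(H * W * C, CLASSES)

# Head 2: average-pool each 7x7 channel down to a single value, then classify
pooled_head = dense_params(C, CLASSES)

print(f"flatten + dense:      {flatten_head:,} parameters")
print(f"average pool + dense: {pooled_head:,} parameters")
print(f"ratio: about {flatten_head / pooled_head:.0f}x")
```

Average pooling has no weights of its own, so the only trainable parameters left in the pooled head belong to the final dense layer.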

Newer deep learning image classification models make use of network-in-network modules: predefined collections of layers, written once (for example, as a function) and reused throughout the network, whose design has already been tried and tested for improving training or efficiency. This modularity is what makes the GoogleNet model so versatile. Its network-in-network module is called the Inception Module.

The original model takes 224×224×3 images as input, uses filters ranging from 1×1 to 5×5, ReLU activations for its convolutional layers, and dropout layers for regularization.

(We will be making our own version of the original model so that it can be trained on the CIFAR-10 dataset.)

The original model representation can be seen below:

Inception Module

The general idea behind the inception module is an architecture in which the input passes through different types of layers at once, extracting distinct features in parallel and concatenating them at the end. This lets the model learn both local and abstract features, which in turn improves its performance.

In the actual model proposed in the paper, the inception module branches into four distinct paths.

- The first path learns local features using a convolutional layer with 1×1 filters.

- The second path first applies 1×1 convolutions for dimensionality reduction, shrinking the number of channels before the input is passed through 3×3 convolutions.

- The third path is the same as the second, except that it uses 5×5 convolutions. The second and third branches are tasked with learning more general features in images.

- The fourth path, known as the pool projection branch, applies 3×3 max pooling and then learns features using a 1×1 convolutional layer.
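The benefit of the 1×1 reduction in the second and third paths shows up directly in the parameter count. The sketch below compares a 5×5 convolution applied to all input channels with the same convolution preceded by a 1×1 reduction. The channel counts (192 in, 16 after reduction, 32 out) are illustrative assumptions, and conv_params is a hypothetical helper. Because each weight is applied at every spatial position, the number of multiply-adds shrinks by roughly the same factor.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Parameters of a k x k convolution: k*k*c_in weights per filter plus one bias each."""
    return k * k * c_in * c_out + c_out

# Illustrative channel counts (assumptions, not taken from this article's model)
C_IN = 192    # channels entering the module
REDUCE = 16   # 1x1 reduction filters
C_OUT = 32    # 5x5 filters

# 5x5 convolution applied directly to all input channels
direct = conv_params(5, C_IN, C_OUT)

# 1x1 reduction first, then the 5x5 convolution on the reduced channels
reduced = conv_params(1, C_IN, REDUCE) + conv_params(5, REDUCE, C_OUT)

print("direct 5x5:  ", direct)
print("1x1 then 5x5:", reduced)
print(f"about {direct / reduced:.1f}x fewer parameters")
```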

These branches operate on the same input in parallel, and their outputs are concatenated afterwards. To make the concatenation possible, ‘same’ padding is used throughout the module, so every branch preserves the spatial size (height and width) of its input.
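Why the padding matters can be seen with plain NumPy arrays standing in for the branch outputs. In this sketch the arrays of zeros are hypothetical placeholders for the four branch outputs (channels-last, batch of one): with matching spatial sizes they concatenate along the channel axis, and a branch that shrank its grid would break the concatenation.

```python
import numpy as np

H, W = 32, 32
# Hypothetical channels-last outputs of the four branches for one image:
# 1x1, 3x3, 5x5 and pool projection, 32 channels each
branches = [np.zeros((1, H, W, c)) for c in (32, 32, 32, 32)]

# With 'same' padding every branch keeps the 32x32 grid, so they stack along channels
merged = np.concatenate(branches, axis=-1)
print(merged.shape)

# A 5x5 convolution with 'valid' padding would shrink 32x32 to 28x28 instead
valid_5x5 = np.zeros((1, H - 4, W - 4, 32))
try:
    np.concatenate([branches[0], branches[1], valid_5x5, branches[3]], axis=-1)
except ValueError as err:
    print("concatenation fails:", err)
```

The number of output channels is simply the sum of the branch filter counts, which is how the inception modules later in this article grow or shrink the channel dimension.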

CIFAR-10 Dataset

For our GoogleNet implementation in Keras, we will be using the CIFAR-10 dataset to train the model.

The CIFAR-10 dataset is a well-known computer vision dataset of 60,000 color images of size 32×32 (50,000 for training and 10,000 for testing). It has 10 classes: airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks. It is widely used as a benchmark for image classification models.

GoogleNet Implementation in Keras

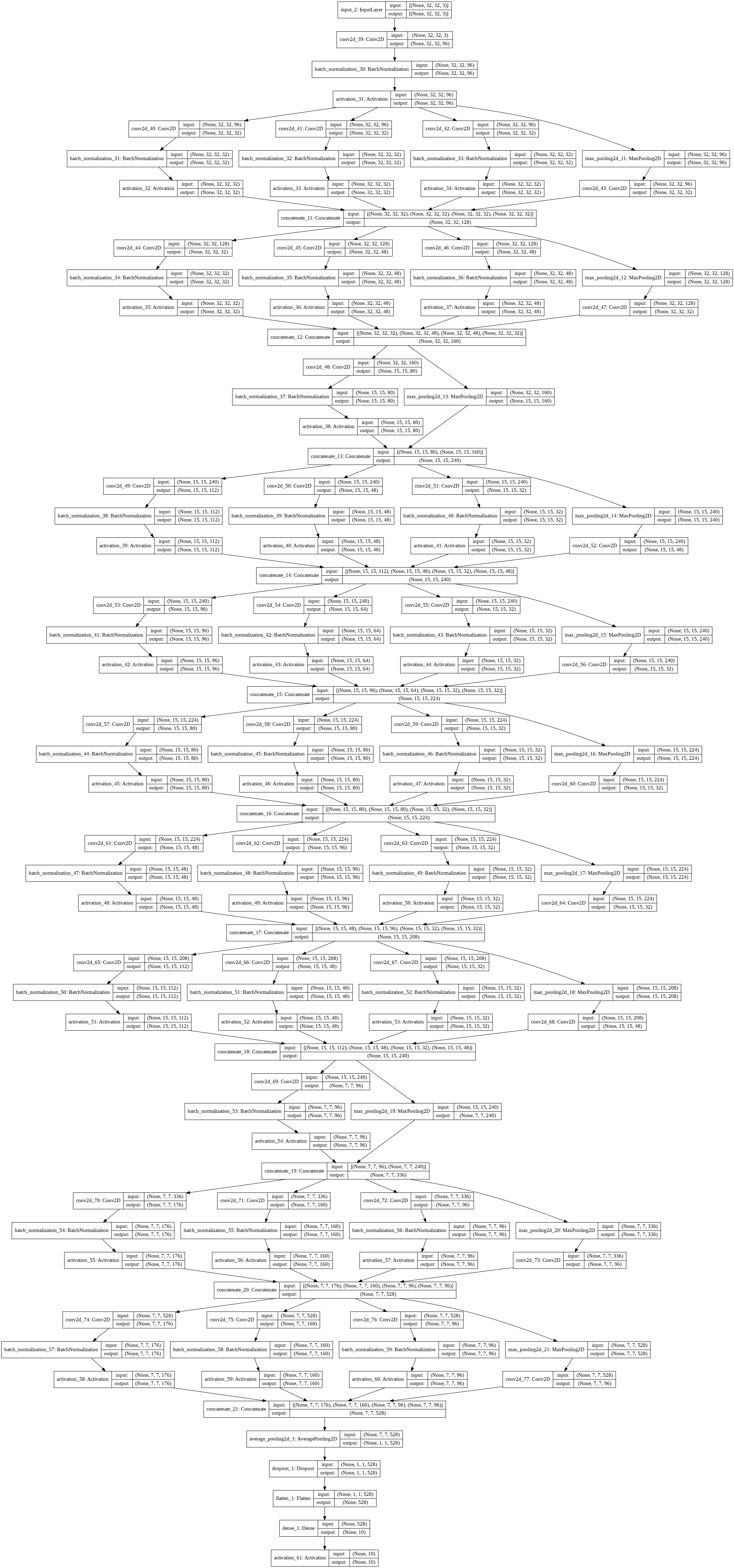

We will implement the scaled-down GoogleNet architecture shown below so that it fits the CIFAR-10 dataset. (To view the image properly, right-click it, save it to your system, and open it at full size.)

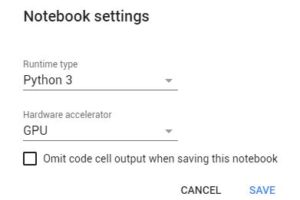

i) Setting up Google Colab

Google Colab is a free online notebook service from Google that provides GPU support. It is a good alternative if your workstation does not have a GPU.

To make sure Google Colab is using the GPU, go to Runtime → Change runtime type and set the hardware accelerator to GPU.

ii) Importing the Libraries

We will start by importing all the libraries mentioned below:

```python
# tf.keras import paths (TensorFlow 2.x). The older keras.layers.convolutional,
# keras.layers.core and keras.layers.normalization modules are not available
# in recent Keras releases.
from tensorflow.keras.layers import BatchNormalization
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Activation
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Input
from tensorflow.keras.layers import concatenate
from tensorflow.keras.models import Model
from tensorflow.keras.preprocessing.image import ImageDataGenerator  # deprecated in newer TF releases
from tensorflow.keras.callbacks import LearningRateScheduler
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.datasets import cifar10
from sklearn.preprocessing import LabelBinarizer
import matplotlib
import numpy as np

# Render plots inside the Colab/Jupyter notebook
%matplotlib inline
```

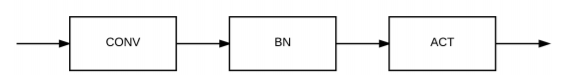

iii) Convolutional Module Implementation

We construct ‘conv_module’, which applies a convolutional layer, then a batch normalization layer, and finally a ReLU activation.

```python
def conv_module(input, No_of_filters, filtersizeX, filtersizeY, stride, chanDim, padding="same"):
    # Convolution -> batch normalization -> ReLU
    input = Conv2D(No_of_filters, (filtersizeX, filtersizeY), strides=stride, padding=padding)(input)
    input = BatchNormalization(axis=chanDim)(input)
    input = Activation("relu")(input)
    return input
```

Arguments:

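- input: the input tensor to be processed.

- No_of_filters: the number of filters in the Conv2D layer.

- filtersizeX and filtersizeY: the size of the filters (the Conv2D kernel size).

- stride: the stride of the convolution.

- chanDim: the channel dimension (axis), along which batch normalization normalizes.

- padding: defaults to ‘same’; the downsample module overrides it with ‘valid’.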
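A quick way to check what one conv_module contributes is to count its parameters by hand. This sketch assumes Keras's default BatchNormalization settings, under which each channel has a trainable scale (gamma) and offset (beta) plus a non-trainable moving mean and moving variance; conv_module_params is a hypothetical helper. The ReLU adds no parameters. The figures for the first conv_module should match the conv2d and batch_normalization rows of the model summary further down.

```python
def conv_module_params(c_in, filters, kx, ky):
    """Parameter counts for one conv_module (Conv2D -> BatchNormalization -> ReLU)."""
    conv = kx * ky * c_in * filters + filters   # kernel weights plus one bias per filter
    bn_trainable = 2 * filters                   # gamma and beta per channel
    bn_non_trainable = 2 * filters               # moving mean and moving variance per channel
    return {"conv": conv, "bn": bn_trainable + bn_non_trainable,
            "non_trainable": bn_non_trainable}

# The model's first conv_module: 96 3x3 filters on a 3-channel (RGB) input
first = conv_module_params(c_in=3, filters=96, kx=3, ky=3)
print(first)

# The 1x1 branch of the first inception module: 32 filters on those 96 channels
print(conv_module_params(c_in=96, filters=32, kx=1, ky=1))
```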

iv) Inception Module Implementation

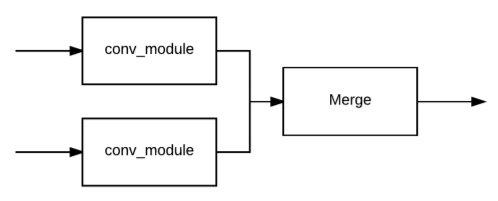

We define our modified inception module using three conv_modules and a pool projection branch:

- The first conv_module uses 1×1 filters (to learn local features in images).

- The second and third use 3×3 and 5×5 filters respectively (responsible for learning more general features). Unlike the original paper, this mini version does not place 1×1 reduction layers in front of them.

- The pool projection branch applies 3×3 max pooling with a stride of 1, followed by a 1×1 convolution.

- Finally, we concatenate the branch outputs along the channel dimension (chanDim).

```python
def inception_module(input, numK1x1, numK3x3, numk5x5, numPoolProj, chanDim):
    # Step 1: 1x1 branch (local features)
    conv_1x1 = conv_module(input, numK1x1, 1, 1, (1, 1), chanDim)
    # Step 2: 3x3 and 5x5 branches (more general features)
    conv_3x3 = conv_module(input, numK3x3, 3, 3, (1, 1), chanDim)
    conv_5x5 = conv_module(input, numk5x5, 5, 5, (1, 1), chanDim)
    # Step 3: pool projection branch, 3x3 max pooling (stride 1, 'same') then a 1x1 conv
    pool_proj = MaxPooling2D((3, 3), strides=(1, 1), padding="same")(input)
    pool_proj = Conv2D(numPoolProj, (1, 1), padding="same", activation="relu")(pool_proj)
    # Step 4: concatenate all four branches along the channel axis
    input = concatenate([conv_1x1, conv_3x3, conv_5x5, pool_proj], axis=chanDim)
    return input
```

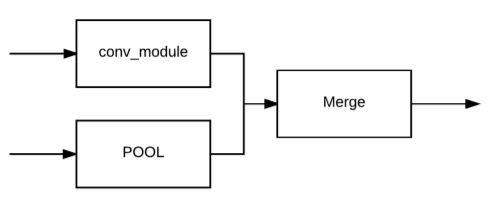

v) Downsample Module Implementation

As the architecture above shows, the model is very deep, so it needs downsampling to reduce the spatial size of the feature maps, which keeps the computation and memory needed by the later layers under control. For this we use a downsample_module. It concatenates the output of a 3×3 max-pooling layer with that of a conv_module with 3×3 filters, both using a stride of 2.

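```python
def downsample_module(input, No_of_filters, chanDim):
    # 3x3 conv with stride 2 and 'valid' padding roughly halves the spatial size
    conv_3x3 = conv_module(input, No_of_filters, 3, 3, (2, 2), chanDim, padding="valid")
    # 3x3 max pooling with stride 2 (default 'valid' padding) gives the same output size
    pool = MaxPooling2D((3, 3), strides=(2, 2))(input)
    input = concatenate([conv_3x3, pool], axis=chanDim)
    return input
```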
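Both branches of downsample_module must produce the same spatial size for the concatenation to work. Under ‘valid’ padding, a 3×3 window with stride 2 maps n positions to ⌊(n − 3)/2⌋ + 1. The helpers below (hypothetical, for illustration only) apply that formula to the two downsample modules used in MiniGoogleNet, with their input shapes taken from the model summary.

```python
def valid_output_size(size: int, kernel: int = 3, stride: int = 2) -> int:
    """Output length along one spatial axis for 'valid' padding: floor((size - kernel) / stride) + 1."""
    return (size - kernel) // stride + 1

def downsample_shape(h, w, c, filters):
    # The conv branch yields `filters` channels and the max-pool branch keeps the c input
    # channels; both use a 3x3 window, stride 2 and 'valid' padding, so their sizes agree
    return valid_output_size(h), valid_output_size(w), filters + c

print(downsample_shape(32, 32, 160, 80))   # first downsample module
print(downsample_shape(15, 15, 240, 96))   # second downsample module
```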

vi) Model Implementation

- Define an input layer from the function's width, height, and depth parameters, followed by a conv_module with 96 3×3 filters.

- Add two inception modules followed by a downsample module.

- Add five inception modules followed by a downsample module.

- Add two more inception modules, then 7×7 average pooling and dropout.

- Add a Flatten layer and a dense layer (with as many units as there are classes), followed by a softmax activation.

```python
def MiniGoogleNet(width, height, depth, classes):
    inputShape = (height, width, depth)
    chanDim = -1  # channels-last data format

    # (Step 1) Define the model input and the first CONV module
    inputs = Input(shape=inputShape)
    x = conv_module(inputs, 96, 3, 3, (1, 1), chanDim)

    # (Step 2) Two Inception modules followed by a downsample module
    x = inception_module(x, 32, 32, 32, 32, chanDim)
    x = inception_module(x, 32, 48, 48, 32, chanDim)
    x = downsample_module(x, 80, chanDim)

    # (Step 3) Five Inception modules followed by a downsample module
    x = inception_module(x, 112, 48, 32, 48, chanDim)
    x = inception_module(x, 96, 64, 32, 32, chanDim)
    x = inception_module(x, 80, 80, 32, 32, chanDim)
    x = inception_module(x, 48, 96, 32, 32, chanDim)
    x = inception_module(x, 112, 48, 32, 48, chanDim)
    x = downsample_module(x, 96, chanDim)

    # (Step 4) Two Inception modules
    x = inception_module(x, 176, 160, 96, 96, chanDim)
    x = inception_module(x, 176, 160, 96, 96, chanDim)

    # 7x7 average pooling (global pooling over the 7x7 feature maps) and dropout
    x = AveragePooling2D((7, 7))(x)
    x = Dropout(0.5)(x)

    # (Step 5) Softmax classifier
    x = Flatten()(x)
    x = Dense(classes)(x)
    x = Activation("softmax")(x)

    # Create the model
    model = Model(inputs, x, name="googlenet")
    return model
```

You can inspect the model architecture by creating a model instance, for example with MiniGoogleNet(width=32, height=32, depth=3, classes=10), and calling its summary() method.

Output:

```
Model: "googlenet"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 32, 32, 3)]  0
conv2d (Conv2D)                 (None, 32, 32, 96)   2688        input_1[0][0]
batch_normalization (BatchNorma (None, 32, 32, 96)   384         conv2d[0][0]
activation (Activation)         (None, 32, 32, 96)   0           batch_normalization[0][0]
conv2d_1 (Conv2D)               (None, 32, 32, 32)   3104        activation[0][0]
conv2d_2 (Conv2D)               (None, 32, 32, 32)   27680       activation[0][0]
conv2d_3 (Conv2D)               (None, 32, 32, 32)   76832       activation[0][0]
batch_normalization_1 (BatchNor (None, 32, 32, 32)   128         conv2d_1[0][0]
batch_normalization_2 (BatchNor (None, 32, 32, 32)   128         conv2d_2[0][0]
batch_normalization_3 (BatchNor (None, 32, 32, 32)   128         conv2d_3[0][0]
max_pooling2d (MaxPooling2D)    (None, 32, 32, 96)   0           activation[0][0]
activation_1 (Activation)       (None, 32, 32, 32)   0           batch_normalization_1[0][0]
activation_2 (Activation)       (None, 32, 32, 32)   0           batch_normalization_2[0][0]
activation_3 (Activation)       (None, 32, 32, 32)   0           batch_normalization_3[0][0]
conv2d_4 (Conv2D)               (None, 32, 32, 32)   3104        max_pooling2d[0][0]
concatenate (Concatenate)       (None, 32, 32, 128)  0           activation_1[0][0] activation_2[0][0] activation_3[0][0] conv2d_4[0][0]
conv2d_5 (Conv2D)               (None, 32, 32, 32)   4128        concatenate[0][0]
conv2d_6 (Conv2D)               (None, 32, 32, 48)   55344       concatenate[0][0]
conv2d_7 (Conv2D)               (None, 32, 32, 48)   153648      concatenate[0][0]
batch_normalization_4 (BatchNor (None, 32, 32, 32)   128         conv2d_5[0][0]
batch_normalization_5 (BatchNor (None, 32, 32, 48)   192         conv2d_6[0][0]
batch_normalization_6 (BatchNor (None, 32, 32, 48)   192         conv2d_7[0][0]
max_pooling2d_1 (MaxPooling2D)  (None, 32, 32, 128)  0           concatenate[0][0]
activation_4 (Activation)       (None, 32, 32, 32)   0           batch_normalization_4[0][0]
activation_5 (Activation)       (None, 32, 32, 48)   0           batch_normalization_5[0][0]
activation_6 (Activation)       (None, 32, 32, 48)   0           batch_normalization_6[0][0]
conv2d_8 (Conv2D)               (None, 32, 32, 32)   4128        max_pooling2d_1[0][0]
concatenate_1 (Concatenate)     (None, 32, 32, 160)  0           activation_4[0][0] activation_5[0][0] activation_6[0][0] conv2d_8[0][0]
conv2d_9 (Conv2D)               (None, 15, 15, 80)   115280      concatenate_1[0][0]
batch_normalization_7 (BatchNor (None, 15, 15, 80)   320         conv2d_9[0][0]
activation_7 (Activation)       (None, 15, 15, 80)   0           batch_normalization_7[0][0]
max_pooling2d_2 (MaxPooling2D)  (None, 15, 15, 160)  0           concatenate_1[0][0]
concatenate_2 (Concatenate)     (None, 15, 15, 240)  0           activation_7[0][0] max_pooling2d_2[0][0]
conv2d_10 (Conv2D)              (None, 15, 15, 112)  26992       concatenate_2[0][0]
conv2d_11 (Conv2D)              (None, 15, 15, 48)   103728      concatenate_2[0][0]
conv2d_12 (Conv2D)              (None, 15, 15, 32)   192032      concatenate_2[0][0]
batch_normalization_8 (BatchNor (None, 15, 15, 112)  448         conv2d_10[0][0]
batch_normalization_9 (BatchNor (None, 15, 15, 48)   192         conv2d_11[0][0]
batch_normalization_10 (BatchNo (None, 15, 15, 32)   128         conv2d_12[0][0]
max_pooling2d_3 (MaxPooling2D)  (None, 15, 15, 240)  0           concatenate_2[0][0]
activation_8 (Activation)       (None, 15, 15, 112)  0           batch_normalization_8[0][0]
activation_9 (Activation)       (None, 15, 15, 48)   0           batch_normalization_9[0][0]
activation_10 (Activation)      (None, 15, 15, 32)   0           batch_normalization_10[0][0]
conv2d_13 (Conv2D)              (None, 15, 15, 48)   11568       max_pooling2d_3[0][0]
concatenate_3 (Concatenate)     (None, 15, 15, 240)  0           activation_8[0][0] activation_9[0][0] activation_10[0][0] conv2d_13[0][0]
conv2d_14 (Conv2D)              (None, 15, 15, 96)   23136       concatenate_3[0][0]
conv2d_15 (Conv2D)              (None, 15, 15, 64)   138304      concatenate_3[0][0]
conv2d_16 (Conv2D)              (None, 15, 15, 32)   192032      concatenate_3[0][0]
batch_normalization_11 (BatchNo (None, 15, 15, 96)   384         conv2d_14[0][0]
batch_normalization_12 (BatchNo (None, 15, 15, 64)   256         conv2d_15[0][0]
batch_normalization_13 (BatchNo (None, 15, 15, 32)   128         conv2d_16[0][0]
max_pooling2d_4 (MaxPooling2D)  (None, 15, 15, 240)  0           concatenate_3[0][0]
activation_11 (Activation)      (None, 15, 15, 96)   0           batch_normalization_11[0][0]
activation_12 (Activation)      (None, 15, 15, 64)   0           batch_normalization_12[0][0]
activation_13 (Activation)      (None, 15, 15, 32)   0           batch_normalization_13[0][0]
conv2d_17 (Conv2D)              (None, 15, 15, 32)   7712        max_pooling2d_4[0][0]
concatenate_4 (Concatenate)     (None, 15, 15, 224)  0           activation_11[0][0] activation_12[0][0] activation_13[0][0] conv2d_17[0][0]
conv2d_18 (Conv2D)              (None, 15, 15, 80)   18000       concatenate_4[0][0]
conv2d_19 (Conv2D)              (None, 15, 15, 80)   161360      concatenate_4[0][0]
conv2d_20 (Conv2D)              (None, 15, 15, 32)   179232      concatenate_4[0][0]
batch_normalization_14 (BatchNo (None, 15, 15, 80)   320         conv2d_18[0][0]
batch_normalization_15 (BatchNo (None, 15, 15, 80)   320         conv2d_19[0][0]
batch_normalization_16 (BatchNo (None, 15, 15, 32)   128         conv2d_20[0][0]
max_pooling2d_5 (MaxPooling2D)  (None, 15, 15, 224)  0           concatenate_4[0][0]
activation_14 (Activation)      (None, 15, 15, 80)   0           batch_normalization_14[0][0]
activation_15 (Activation)      (None, 15, 15, 80)   0           batch_normalization_15[0][0]
activation_16 (Activation)      (None, 15, 15, 32)   0           batch_normalization_16[0][0]
conv2d_21 (Conv2D)              (None, 15, 15, 32)   7200        max_pooling2d_5[0][0]
concatenate_5 (Concatenate)     (None, 15, 15, 224)  0           activation_14[0][0] activation_15[0][0] activation_16[0][0] conv2d_21[0][0]
conv2d_22 (Conv2D)              (None, 15, 15, 48)   10800       concatenate_5[0][0]
conv2d_23 (Conv2D)              (None, 15, 15, 96)   193632      concatenate_5[0][0]
conv2d_24 (Conv2D)              (None, 15, 15, 32)   179232      concatenate_5[0][0]
batch_normalization_17 (BatchNo (None, 15, 15, 48)   192         conv2d_22[0][0]
batch_normalization_18 (BatchNo (None, 15, 15, 96)   384         conv2d_23[0][0]
batch_normalization_19 (BatchNo (None, 15, 15, 32)   128         conv2d_24[0][0]
max_pooling2d_6 (MaxPooling2D)  (None, 15, 15, 224)  0           concatenate_5[0][0]
activation_17 (Activation)      (None, 15, 15, 48)   0           batch_normalization_17[0][0]
activation_18 (Activation)      (None, 15, 15, 96)   0           batch_normalization_18[0][0]
activation_19 (Activation)      (None, 15, 15, 32)   0           batch_normalization_19[0][0]
conv2d_25 (Conv2D)              (None, 15, 15, 32)   7200        max_pooling2d_6[0][0]
concatenate_6 (Concatenate)     (None, 15, 15, 208)  0           activation_17[0][0] activation_18[0][0] activation_19[0][0] conv2d_25[0][0]
conv2d_26 (Conv2D)              (None, 15, 15, 112)  23408       concatenate_6[0][0]
conv2d_27 (Conv2D)              (None, 15, 15, 48)   89904       concatenate_6[0][0]
conv2d_28 (Conv2D)              (None, 15, 15, 32)   166432      concatenate_6[0][0]
batch_normalization_20 (BatchNo (None, 15, 15, 112)  448         conv2d_26[0][0]
batch_normalization_21 (BatchNo (None, 15, 15, 48)   192         conv2d_27[0][0]
batch_normalization_22 (BatchNo (None, 15, 15, 32)   128         conv2d_28[0][0]
max_pooling2d_7 (MaxPooling2D)  (None, 15, 15, 208)  0           concatenate_6[0][0]
activation_20 (Activation)      (None, 15, 15, 112)  0           batch_normalization_20[0][0]
activation_21 (Activation)      (None, 15, 15, 48)   0           batch_normalization_21[0][0]
activation_22 (Activation)      (None, 15, 15, 32)   0           batch_normalization_22[0][0]
conv2d_29 (Conv2D)              (None, 15, 15, 48)   10032       max_pooling2d_7[0][0]
concatenate_7 (Concatenate)     (None, 15, 15, 240)  0           activation_20[0][0] activation_21[0][0] activation_22[0][0] conv2d_29[0][0]
conv2d_30 (Conv2D)              (None, 7, 7, 96)     207456      concatenate_7[0][0]
batch_normalization_23 (BatchNo (None, 7, 7, 96)     384         conv2d_30[0][0]
activation_23 (Activation)      (None, 7, 7, 96)     0           batch_normalization_23[0][0]
max_pooling2d_8 (MaxPooling2D)  (None, 7, 7, 240)    0           concatenate_7[0][0]
concatenate_8 (Concatenate)     (None, 7, 7, 336)    0           activation_23[0][0] max_pooling2d_8[0][0]
conv2d_31 (Conv2D)              (None, 7, 7, 176)    59312       concatenate_8[0][0]
conv2d_32 (Conv2D)              (None, 7, 7, 160)    484000      concatenate_8[0][0]
conv2d_33 (Conv2D)              (None, 7, 7, 96)     806496      concatenate_8[0][0]
batch_normalization_24 (BatchNo (None, 7, 7, 176)    704         conv2d_31[0][0]
batch_normalization_25 (BatchNo (None, 7, 7, 160)    640         conv2d_32[0][0]
batch_normalization_26 (BatchNo (None, 7, 7, 96)     384         conv2d_33[0][0]
max_pooling2d_9 (MaxPooling2D)  (None, 7, 7, 336)    0           concatenate_8[0][0]
activation_24 (Activation)      (None, 7, 7, 176)    0           batch_normalization_24[0][0]
activation_25 (Activation)      (None, 7, 7, 160)    0           batch_normalization_25[0][0]
activation_26 (Activation)      (None, 7, 7, 96)     0           batch_normalization_26[0][0]
conv2d_34 (Conv2D)              (None, 7, 7, 96)     32352       max_pooling2d_9[0][0]
concatenate_9 (Concatenate)     (None, 7, 7, 528)    0           activation_24[0][0] activation_25[0][0] activation_26[0][0] conv2d_34[0][0]
conv2d_35 (Conv2D)              (None, 7, 7, 176)    93104       concatenate_9[0][0]
conv2d_36 (Conv2D)              (None, 7, 7, 160)    760480      concatenate_9[0][0]
conv2d_37 (Conv2D)              (None, 7, 7, 96)     1267296     concatenate_9[0][0]
batch_normalization_27 (BatchNo (None, 7, 7, 176)    704         conv2d_35[0][0]
batch_normalization_28 (BatchNo (None, 7, 7, 160)    640         conv2d_36[0][0]
batch_normalization_29 (BatchNo (None, 7, 7, 96)     384         conv2d_37[0][0]
max_pooling2d_10 (MaxPooling2D) (None, 7, 7, 528)    0           concatenate_9[0][0]
activation_27 (Activation)      (None, 7, 7, 176)    0           batch_normalization_27[0][0]
activation_28 (Activation)      (None, 7, 7, 160)    0           batch_normalization_28[0][0]
activation_29 (Activation)      (None, 7, 7, 96)     0           batch_normalization_29[0][0]
conv2d_38 (Conv2D)              (None, 7, 7, 96)     50784       max_pooling2d_10[0][0]
concatenate_10 (Concatenate)    (None, 7, 7, 528)    0           activation_27[0][0] activation_28[0][0] activation_29[0][0] conv2d_38[0][0]
average_pooling2d (AveragePooli (None, 1, 1, 528)    0           concatenate_10[0][0]
dropout (Dropout)               (None, 1, 1, 528)    0           average_pooling2d[0][0]
flatten (Flatten)               (None, 528)          0           dropout[0][0]
dense (Dense)                   (None, 10)           5290        flatten[0][0]
activation_30 (Activation)      (None, 10)           0           dense[0][0]
==================================================================================================
Total params: 5,963,658
Trainable params: 5,959,050
Non-trainable params: 4,608
__________________________________________________________________________________________________
```
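The shapes in this summary can be cross-checked without TensorFlow. The pure-Python sketch below (inception, downsample, and STAGES are names made up for this check) replays the module arguments from MiniGoogleNet and reproduces the concatenate output shapes listed above, as well as the 5,290 parameters of the final dense layer.

```python
# Shape trace of MiniGoogleNet for a 32x32x3 input (channels-last), mirroring the
# inception_module / downsample_module arguments used in the article's code
def inception(shape, k1x1, k3x3, k5x5, pool_proj):
    h, w, _ = shape
    # 'same' padding: spatial size unchanged, channels = sum of the four branches
    return (h, w, k1x1 + k3x3 + k5x5 + pool_proj)

def downsample(shape, filters):
    h, w, c = shape
    # 3x3 windows, stride 2, 'valid' padding on both branches
    return ((h - 3) // 2 + 1, (w - 3) // 2 + 1, filters + c)

STAGES = [
    (inception, (32, 32, 32, 32)), (inception, (32, 48, 48, 32)), (downsample, (80,)),
    (inception, (112, 48, 32, 48)), (inception, (96, 64, 32, 32)),
    (inception, (80, 80, 32, 32)), (inception, (48, 96, 32, 32)),
    (inception, (112, 48, 32, 48)), (downsample, (96,)),
    (inception, (176, 160, 96, 96)), (inception, (176, 160, 96, 96)),
]

shape = (32, 32, 96)  # output of the first 3x3 conv_module with 96 filters
shapes = [shape]
for block, args in STAGES:
    shape = block(shape, *args)
    shapes.append(shape)
    print(f"{block.__name__:<10} -> {shape}")

h, w, c = shape
# AveragePooling2D((7, 7)): the stride defaults to the pool size, padding to 'valid'
pooled = ((h - 7) // 7 + 1, (w - 7) // 7 + 1, c)
dense_param_count = c * 10 + 10  # Dense(10) on the flattened 1x1x528 map
print("after average pooling:", pooled, "dense params:", dense_param_count)
```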

vii) Decay Parameter

Initialize the number of epochs and the initial learning rate. Next, create a polynomial decay function that computes the learning rate for each epoch, decaying it polynomially from the initial value toward zero. With power = 1.0, as used here, the decay is linear.

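```python
NUM_EPOCHS = 50
INIT_LR = 5e-3

def poly_decay(epoch):
    # Polynomial decay: the learning rate falls from INIT_LR toward 0 over NUM_EPOCHS
    maxEpochs = NUM_EPOCHS
    baseLR = INIT_LR
    power = 1.0  # power = 1.0 makes the decay linear
    alpha = baseLR * (1 - (epoch / float(maxEpochs))) ** power
    return alpha
```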
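To see what the schedule does in practice, the sketch below evaluates the same formula at a few epochs. poly_lr is a hypothetical generalization of poly_decay with the power exposed as a parameter; with its defaults (50 epochs, 5e-3, power 1.0) it returns the same values as poly_decay. The quadratic column is only for comparison and is not used in this article's training run.

```python
def poly_lr(epoch, max_epochs=50, base_lr=5e-3, power=1.0):
    """Polynomial decay: base_lr * (1 - epoch / max_epochs) ** power."""
    return base_lr * (1 - epoch / float(max_epochs)) ** power

# Keras passes 0-based epoch indices to the schedule, so a 50-epoch run uses 0..49
for epoch in (0, 10, 25, 49):
    linear = poly_lr(epoch)                 # power = 1.0, as in poly_decay
    quadratic = poly_lr(epoch, power=2.0)   # a steeper curve, for comparison only
    print(f"epoch {epoch:2d}: linear {linear:.6f}  quadratic {quadratic:.6f}")
```

Because the last epoch index is 49, the learning rate in the final epoch is 1e-4 rather than exactly zero.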

viii) Preparing the Data

Load the CIFAR-10 dataset and convert the image arrays to the float data type.

- Compute the mean image of the training set and subtract it from both the training and test sets, so that the pixel values are centered around zero.

- Use the label binarizer to convert the integer labels into one-hot vectors.

- Use the image data generator to add variation to the training data (random shifts and horizontal flips).

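```python
# Load CIFAR-10 and convert the pixel values to floats
((trainX, trainY), (testX, testY)) = cifar10.load_data()
trainX = trainX.astype("float")
testX = testX.astype("float")

# Step 1: subtract the training-set mean image from both splits
mean = np.mean(trainX, axis=0)
trainX -= mean
testX -= mean

# Step 2: one-hot encode the integer labels
lb = LabelBinarizer()
trainY = lb.fit_transform(trainY)
testY = lb.transform(testY)

# Step 3: on-the-fly augmentation (random shifts and horizontal flips)
aug = ImageDataGenerator(width_shift_range=0.1, height_shift_range=0.1,
                         horizontal_flip=True, fill_mode="nearest")
```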
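The preprocessing steps can be tried on small arrays to see what they produce. In this sketch, random integers stand in for CIFAR-10 images (an assumption so that it runs without downloading the dataset), and np.eye(10)[labels] stands in for LabelBinarizer. The two give the same 10-column encoding when all ten classes occur in the training labels, as they do in CIFAR-10.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the CIFAR-10 arrays: 8 "training" and 4 "test" images
trainX = rng.integers(0, 256, size=(8, 32, 32, 3)).astype("float")
testX = rng.integers(0, 256, size=(4, 32, 32, 3)).astype("float")
# Integer labels with shape (N, 1), the same layout cifar10.load_data() returns
trainY = np.array([[3], [8], [0], [6], [1], [9], [2], [3]])

# The mean is a full 32x32x3 "mean image" computed on the training split only
mean = np.mean(trainX, axis=0)
trainX -= mean
testX -= mean  # the test split is shifted by the training mean, not by its own

# One-hot encoding: row i has a 1 in column trainY[i]
one_hot = np.eye(10)[trainY.ravel()]
print(mean.shape, one_hot.shape)
print(one_hot[0])
```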

ix) Compiling and Fitting the Model

Define the callbacks and the optimizer for the model, then create a model instance with width, height, depth, and classes set to (32, 32, 3, 10).

- Compile the model with categorical cross-entropy loss and accuracy as the metric.

- Fit the model with the data generator, validation data, steps per epoch, number of epochs, and callbacks.

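```python
callbacks = [LearningRateScheduler(poly_decay)]
# learning_rate= replaces the deprecated lr= argument in recent Keras versions
opt = SGD(learning_rate=INIT_LR, momentum=0.9)
model = MiniGoogleNet(width=32, height=32, depth=3, classes=10)

# Step 1: compile the model
model.compile(loss="categorical_crossentropy", optimizer=opt, metrics=["accuracy"])

# Step 2: train on augmented batches of 64; the test split doubles as validation data
model.fit(aug.flow(trainX, trainY, batch_size=64),
          validation_data=(testX, testY),
          steps_per_epoch=len(trainX) // 64,
          epochs=NUM_EPOCHS, callbacks=callbacks, verbose=1)
```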
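The steps_per_epoch argument explains the 781/781 counter in the training log. A small arithmetic sketch, assuming the standard 50,000-image CIFAR-10 training split and the batch size of 64 used above:

```python
NUM_TRAIN = 50_000  # CIFAR-10 training images
BATCH_SIZE = 64

steps_per_epoch = NUM_TRAIN // BATCH_SIZE      # integer division, as in len(trainX) // 64
images_per_epoch = steps_per_epoch * BATCH_SIZE
print(steps_per_epoch, images_per_epoch, NUM_TRAIN - images_per_epoch)
```

So 781 full batches of 64 cover 49,984 images per epoch, 16 short of the full training set.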

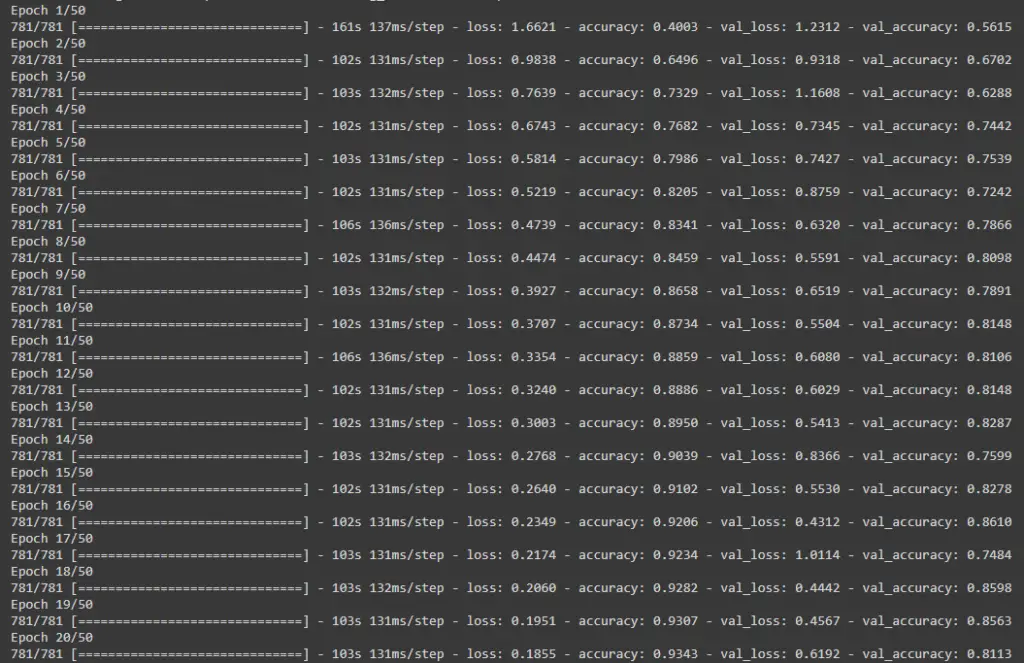

Initial epoch output:

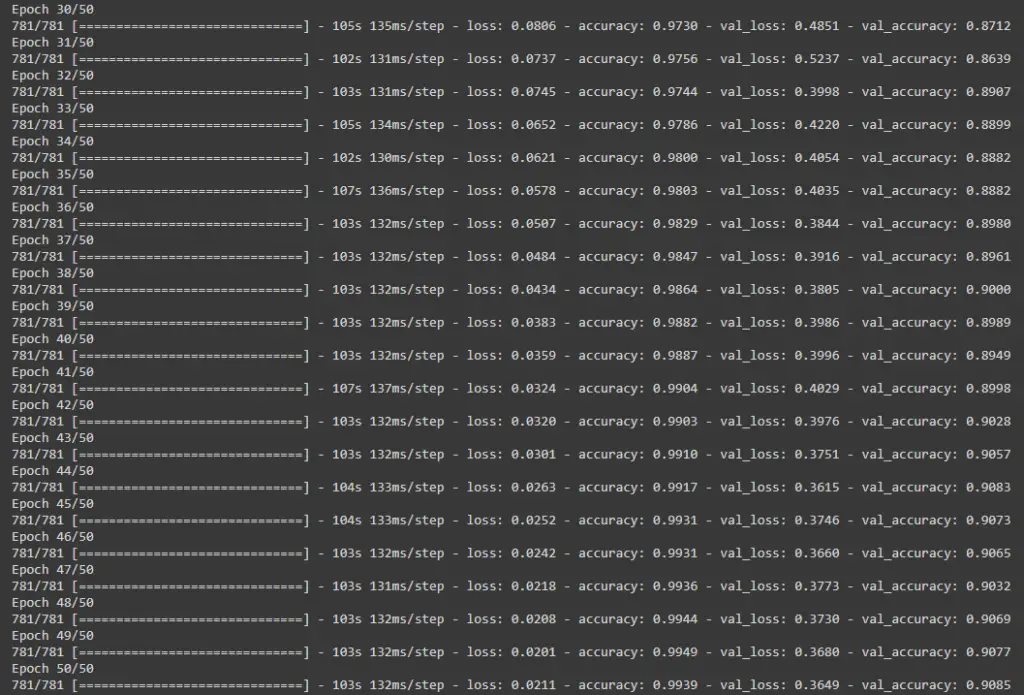

Final epoch output:

```
Epoch 50/50
781/781 [==============================] - 103s 132ms/step - loss: 0.0211 - accuracy: 0.9939 - val_loss: 0.3649 - val_accuracy: 0.9085
```

x) Evaluating the Model

Use the code below to check the test accuracy. It comes out to about 90.8 percent, which at the time of writing placed it in the top 20 for the CIFAR-10 dataset on Kaggle.

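```python
# evaluate() returns [loss, accuracy] because the model was compiled with metrics=["accuracy"]
score = model.evaluate(testX, testY)
print('Test Score:', score[0])
print('Test Accuracy:', score[1])
```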

Output:

```
Test Score: 0.3751
Test Accuracy: 0.9077
```

Inference

Below are some inference results from our trained GoogleNet model.
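Turning the network's output into a readable label only takes an argmax over the softmax vector. The sketch below uses made-up probabilities in place of real model.predict() output and assumes the standard CIFAR-10 label order; label_predictions is a hypothetical helper. A new image has to go through the same preprocessing as the training data (float conversion and subtracting the training mean) before it is passed to the model.

```python
import numpy as np

# CIFAR-10 class names in label order 0-9
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def label_predictions(probs):
    """Map each row of softmax probabilities to (class name, probability) of its top class."""
    top = np.argmax(probs, axis=1)
    return [(CLASS_NAMES[i], float(probs[row, i])) for row, i in enumerate(top)]

# Hypothetical softmax outputs for two images; with the trained model these would
# come from model.predict() on mean-subtracted images
probs = np.array([
    [0.05, 0.05, 0.05, 0.70, 0.05, 0.02, 0.02, 0.02, 0.02, 0.02],
    [0.02, 0.02, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.85, 0.05],
])
print(label_predictions(probs))
```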

Conclusion

This brings us to the end of the tutorial, in which we implemented GoogleNet in Keras. We trained it on the CIFAR-10 dataset, but you can use your own datasets for this purpose as well.

References:

- For more details on the GoogleNet architecture, see the original paper by Christian Szegedy et al., Going Deeper with Convolutions (CVPR 2015).

- Special thanks to Adrian Rosebrock for providing an extended look at the GoogleNet model.

