Introduction

The environment is a fundamental element of the reinforcement learning (RL) problem. A correct understanding of the environment with which the RL agent interacts helps us choose the right design and learning technique for the agent. In this article, we will look at the concept of an environment in reinforcement learning and its action space, and then at the various types of environments that can occur in reinforcement learning problems.

What is Environment in Reinforcement Learning?

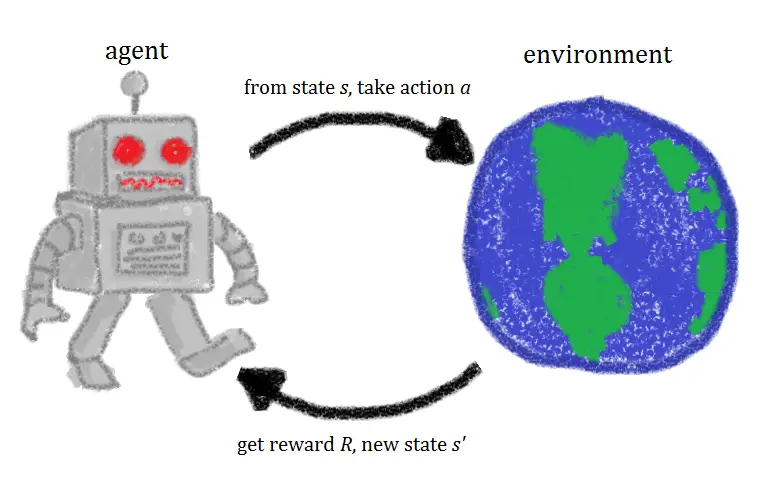

In reinforcement learning, the environment is the world in which the agent lives and with which it interacts. The agent can interact with the environment by performing actions, but those actions cannot change the rules or dynamics of the environment. By analogy, if humans are the agents and the Earth is the environment, then we are bound by the planet’s laws of physics: we can act on the environment, but we cannot change its physics.

When an agent performs an action, the environment returns a new state, and the agent moves to that state. The environment also sends the agent a reward, a scalar value that acts as feedback on whether the action was good or bad.

Let us understand this intuitively with the help of a Mario game. Here, Mario is the agent and the game world is the environment. Whenever Mario performs an action, such as moving left, moving right, or jumping, the environment renders new frames, which represent a new state, and Mario ends up in that state.

Imagine Mario attempting to jump over a pit. If the jump fails, the environment moves Mario to a new state, the bottom of the pit, and returns a negative reward to penalize him. If he reaches the other side of the pit, the environment gives a positive reward to signal that his actions were correct. (The specific values of the positive and negative rewards depend on how you model your environment.)
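The interaction described above can be sketched in a few lines of Python. This is a minimal illustration, not a real Mario emulator: the class name `PitJumpEnv`, the state names, the action names, and the reward values of +1 and -1 are all assumptions made for this example.

```python
class PitJumpEnv:
    """Hypothetical toy environment: the agent stands in front of a pit."""

    ACTIONS = ("left", "right", "jump")

    def __init__(self):
        self.state = "before_pit"

    def reset(self):
        """Start a new episode and return the initial state."""
        self.state = "before_pit"
        return self.state

    def step(self, action):
        """Apply an action and return (next_state, reward, done)."""
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action}")
        if action == "jump":
            # Assumed: a jump always clears the pit and earns a positive reward.
            self.state = "other_side"
            return self.state, 1.0, True
        if action == "right":
            # Walking forward without jumping drops the agent into the pit.
            self.state = "bottom_of_pit"
            return self.state, -1.0, True
        # Moving left keeps the agent where it is, with no reward.
        return self.state, 0.0, False


def run_episode(env, policy, max_steps=10):
    """Run the agent-environment loop and return (final_state, total_reward)."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return state, total_reward
```

Note that the policy only chooses actions. The rules inside `step` belong to the environment, and the agent has no way to change them.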

Environment Action Space in Reinforcement Learning

Action space is a set of actions that are permissible for the agent in a given environment. Each environment is associated with its own action space.

For example, in Pac-Man, the action space would be [Left, Right, Up, Down], and in the Atari Wall Breaker game, the action space would only be [Left, Right].

The action space can be of the following types –

1. Discrete Action Space

In a discrete action space, the agent chooses from a finite set of distinct actions. For example, Pac-Man has a discrete action space of [Left, Right, Up, Down].

2. Continuous Action Space

In a continuous action space, actions take real values from a continuous range. For example, a self-driving car environment has a continuous action space of [steering wheel rotation, velocity].
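The difference between the two kinds of action space shows up in how an action is represented and sampled. The sketch below is only illustrative: the function names and the numeric bounds for steering and velocity are assumptions, not values from any particular simulator.

```python
import random

# Discrete action space: a finite set of named actions.
DISCRETE_ACTIONS = ("left", "right", "up", "down")

# Continuous action space: each dimension is a real-valued interval (low, high).
# The bounds below are assumed for illustration only.
CONTINUOUS_BOUNDS = {"steering": (-1.0, 1.0), "velocity": (0.0, 30.0)}


def sample_discrete(rng):
    """Pick one of the finitely many actions."""
    return rng.choice(DISCRETE_ACTIONS)


def sample_continuous(rng):
    """Pick a real value inside the bounds of every action dimension."""
    return {
        name: rng.uniform(low, high)
        for name, (low, high) in CONTINUOUS_BOUNDS.items()
    }
```

A discrete action is a single choice from a list, whereas a continuous action is a vector of real numbers, one per controllable quantity.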

Different Types Of Environments in Reinforcement Learning

Different types of environments in reinforcement learning can be categorized as follows –

1. Deterministic vs Stochastic Environment

Deterministic Environment

In a deterministic environment, the next state of the environment can always be determined based on the current state and the agent’s action.

For example, while driving a car, if the agent steers left, the car moves left. In a perfect world, it never happens that you steer the car left and it moves right; it always moves left, and this is deterministic.

Stochastic Environment

In a stochastic environment, the next state cannot always be determined from the current state and the agent’s action; the same action in the same state can lead to different outcomes.

For example, suppose our agent’s world of driving a car is not perfect. When the agent applies the brake, there is a small probability that the brake fails and the car does not stop. Similarly, when the agent tries to accelerate the car, there is a small probability that it simply stops. So in this environment, the next state of the car cannot always be determined from its current state and the agent’s action.
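The braking example can be written as two transition functions. This is a sketch under assumptions: the function names and the default failure probability of 0.1 are made up for illustration.

```python
import random


def brake_deterministic(speed):
    """Deterministic transition: braking always brings the car to a stop."""
    return 0.0


def brake_stochastic(speed, rng, failure_prob=0.1):
    """Stochastic transition: the brake fails with probability failure_prob.

    On failure the speed is unchanged; otherwise the car stops.
    The default failure_prob is an assumed value.
    """
    if rng.random() < failure_prob:
        return speed
    return 0.0
```

In the deterministic version, the current state and the action fully determine the next state. In the stochastic version, they only determine a probability distribution over next states.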

2. Single Agent vs Multi-Agent Environment

Single Agent Environment

In a single agent environment, there is only one agent that exists and interacts with the environment.

For example, if there is only one agent that is driving the car from point A to point B then it is a single agent environment.

Multi-Agent Environment

In a multi-agent environment, more than one agent interacts with the environment.

In our example, if there are multiple cars controlled by different agents, then it is a multi-agent environment.
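One simple way to picture the difference is that a multi-agent environment receives one action per agent at every step. The sketch below assumes a hypothetical one-dimensional road where each car's state is an integer position and each action is -1, 0, or +1; the function name `step_cars` and the agent names are illustrative.

```python
def step_cars(positions, actions):
    """Advance every car by its own agent's action.

    positions: dict mapping agent name -> integer position on the road
    actions:   dict mapping agent name -> -1, 0, or +1
    Agents that submit no action stay where they are.
    """
    return {
        agent: position + actions.get(agent, 0)
        for agent, position in positions.items()
    }


# Single-agent case: one car, one action.
single = step_cars({"car_a": 0}, {"car_a": 1})

# Multi-agent case: two cars, each controlled by a different agent.
multi = step_cars({"car_a": 0, "car_b": 5}, {"car_a": 1, "car_b": -1})
```

The same step function covers both cases; the single-agent environment is simply the special case with one entry in each dictionary.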

3. Discrete vs Continuous Environment

Discrete Environment

In a discrete environment, the action space of the environment is discrete in nature.

For example, consider a grid world environment where the available action space is discrete like [left, right, up, down].

Continuous Environment

In a continuous environment, the action space of the environment is continuous in nature.

Our self-driving car world is an example of a continuous environment. Here the action space consists of continuous actions such as the car’s speed, acceleration, and steering rotation.

4. Episodic vs Sequential Environment

Episodic Environment

In an episodic environment, the agent’s actions are confined to the current episode and do not depend on actions taken in previous episodes.

For example, in a cups-and-ball game where the task is to guess which of three cups hides the ball, the agent’s action is independent of its earlier attempts or episodes.

Sequential Environment

In a sequential environment, the agent’s actions are connected with the previous actions it took.

For example, in chess, the current state of the game is the result of all the moves made so far, and all future actions will also depend on that history.
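The contrast can be sketched with two small functions. Both are assumptions made for illustration: `cups_round` models one round of the cups-and-ball game with the ball re-hidden at random each time, and `walk` is a deliberately tiny stand-in for a sequential task (far simpler than chess) in which each state is built from the previous one.

```python
import random


def cups_round(guess, rng):
    """Episodic: the ball is re-hidden every round, so past rounds do not matter.

    guess is the index (0, 1, or 2) of the chosen cup.
    Returns a reward of 1.0 for a correct guess and 0.0 otherwise.
    """
    ball = rng.randrange(3)
    return 1.0 if guess == ball else 0.0


def walk(actions, start=0):
    """Sequential: every state depends on the state reached by earlier actions.

    actions is a sequence of integer moves; returns the list of visited states.
    """
    state = start
    visited = []
    for action in actions:
        state += action
        visited.append(state)
    return visited
```

In `cups_round` nothing carries over between calls, while in `walk` the same set of actions taken in a different order leads through different states.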

5. Fully Observable vs Partially Observable Environment

Fully Observable Environment

In a fully observable environment, the agent is always aware of the complete state of the environment at any given point in time.

In the game of chess, the agent can always see the positions of all its own and its opponent’s pieces on the board. Hence it is a fully observable environment.

Partially Observable Environment

In a partially observable environment, the agent cannot always see the complete state of the environment at any given point in time.

In the game of poker, the agent cannot see the opponent’s hand for most of the game, and hence it is a partially observable environment.
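In code, the distinction is between the environment's full state and the observation the agent actually receives. The sketch below assumes a hypothetical poker-like state stored as a dictionary with a `board` and per-player `hands`; the function names and card strings are illustrative only.

```python
def observe_full(state):
    """Fully observable: the agent receives a copy of the complete state."""
    return dict(state)


def observe_partial(state, player):
    """Partially observable: the agent sees the board and only its own hand."""
    return {
        "board": state["board"],
        "my_hand": state["hands"][player],
    }


# Assumed example state: shared board cards plus each player's private hand.
state = {
    "board": ["Ah", "Kd", "7c"],
    "hands": {"agent": ["Qs", "Qh"], "opponent": ["2c", "9d"]},
}
observation = observe_partial(state, "agent")
```

The opponent's cards are still part of the environment's state; they are simply missing from what the agent observes.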

Conclusion

We hope you now understand what an environment is in reinforcement learning. In this article, we covered the concept of the environment and its action space, and then looked at the various types of environments that can be found in reinforcement learning problems.